Client

PydanticAI can act as an MCP client, connecting to MCP servers to use their tools.

Install

You need to either install pydantic-ai, orpydantic-ai-slim with the mcp optional group:

pip install "pydantic-ai-slim[mcp]"

uv add "pydantic-ai-slim[mcp]"

Note

MCP integration requires Python 3.10 or higher.

Usage

PydanticAI comes with two ways to connect to MCP servers:

MCPServerHTTPwhich connects to an MCP server using the HTTP SSE transportMCPServerStdiowhich runs the server as a subprocess and connects to it using the stdio transport

Examples of both are shown below; mcp-run-python is used as the MCP server in both examples.

SSE Client

MCPServerHTTP connects over HTTP using the HTTP + Server Sent Events transport to a server.

Note

MCPServerHTTP requires an MCP server to be running and accepting HTTP connections before calling agent.run_mcp_servers(). Running the server is not managed by PydanticAI.

The name "HTTP" is used since this implemented will be adapted in future to use the new Streamable HTTP currently in development.

Before creating the SSE client, we need to run the server (docs here):

deno run \

-N -R=node_modules -W=node_modules --node-modules-dir=auto \

jsr:@pydantic/mcp-run-python sse

from pydantic_ai import Agent

from pydantic_ai.mcp import MCPServerHTTP

server = MCPServerHTTP(url='http://localhost:3001/sse') # (1)!

agent = Agent('openai:gpt-4o', mcp_servers=[server]) # (2)!

async def main():

async with agent.run_mcp_servers(): # (3)!

result = await agent.run('How many days between 2000-01-01 and 2025-03-18?')

print(result.output)

#> There are 9,208 days between January 1, 2000, and March 18, 2025.

- Define the MCP server with the URL used to connect.

- Create an agent with the MCP server attached.

- Create a client session to connect to the server.

(This example is complete, it can be run "as is" with Python 3.10+ — you'll need to add asyncio.run(main()) to run main)

What's happening here?

- The model is receiving the prompt "how many days between 2000-01-01 and 2025-03-18?"

- The model decides "Oh, I've got this

run_python_codetool, that will be a good way to answer this question", and writes some python code to calculate the answer. - The model returns a tool call

- PydanticAI sends the tool call to the MCP server using the SSE transport

- The model is called again with the return value of running the code

- The model returns the final answer

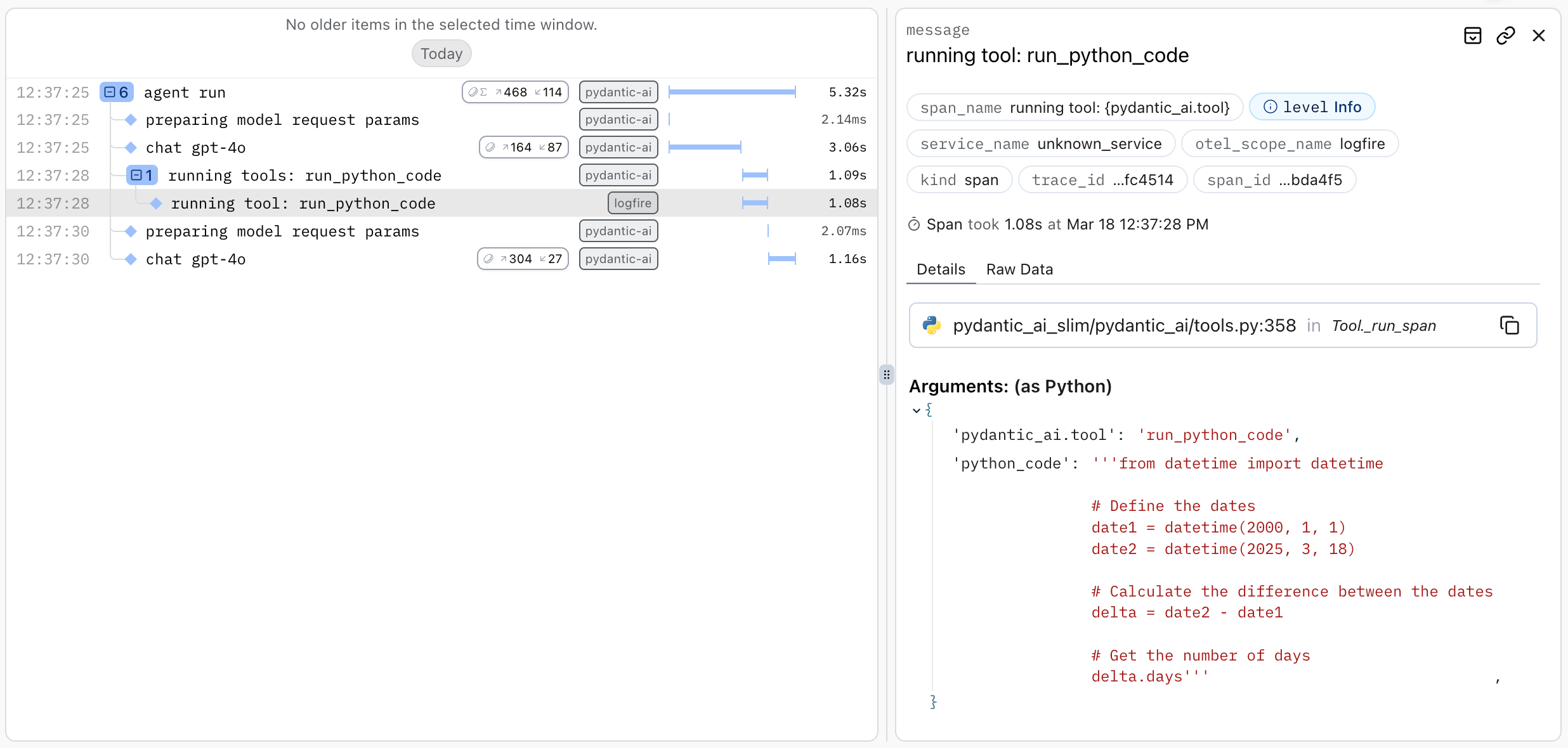

You can visualise this clearly, and even see the code that's run by adding three lines of code to instrument the example with logfire:

import logfire

logfire.configure()

logfire.instrument_pydantic_ai()

Will display as follows:

MCP "stdio" Server

The other transport offered by MCP is the stdio transport where the server is run as a subprocess and communicates with the client over stdin and stdout. In this case, you'd use the MCPServerStdio class.

Note

When using MCPServerStdio servers, the agent.run_mcp_servers() context manager is responsible for starting and stopping the server.

from pydantic_ai import Agent

from pydantic_ai.mcp import MCPServerStdio

server = MCPServerStdio( # (1)!

'deno',

args=[

'run',

'-N',

'-R=node_modules',

'-W=node_modules',

'--node-modules-dir=auto',

'jsr:@pydantic/mcp-run-python',

'stdio',

]

)

agent = Agent('openai:gpt-4o', mcp_servers=[server])

async def main():

async with agent.run_mcp_servers():

result = await agent.run('How many days between 2000-01-01 and 2025-03-18?')

print(result.output)

#> There are 9,208 days between January 1, 2000, and March 18, 2025.

- See MCP Run Python for more information.

Using Tool Prefixes to Avoid Naming Conflicts

When connecting to multiple MCP servers that might provide tools with the same name, you can use the tool_prefix parameter to avoid naming conflicts. This parameter adds a prefix to all tool names from a specific server.

How It Works

- If

tool_prefixis set, all tools from that server will be prefixed with{tool_prefix}_ - When listing tools, the prefixed names are shown to the model

- When calling tools, the prefix is automatically removed before sending the request to the server

This allows you to use multiple servers that might have overlapping tool names without conflicts.

Example with HTTP Server

from pydantic_ai import Agent

from pydantic_ai.mcp import MCPServerHTTP

# Create two servers with different prefixes

weather_server = MCPServerHTTP(

url='http://localhost:3001/sse',

tool_prefix='weather' # Tools will be prefixed with 'weather_'

)

calculator_server = MCPServerHTTP(

url='http://localhost:3002/sse',

tool_prefix='calc' # Tools will be prefixed with 'calc_'

)

# Both servers might have a tool named 'get_data', but they'll be exposed as:

# - 'weather_get_data'

# - 'calc_get_data'

agent = Agent('openai:gpt-4o', mcp_servers=[weather_server, calculator_server])

Example with Stdio Server

from pydantic_ai import Agent

from pydantic_ai.mcp import MCPServerStdio

python_server = MCPServerStdio(

'deno',

args=[

'run',

'-N',

'jsr:@pydantic/mcp-run-python',

'stdio',

],

tool_prefix='py' # Tools will be prefixed with 'py_'

)

js_server = MCPServerStdio(

'node',

args=[

'run',

'mcp-js-server.js',

'stdio',

],

tool_prefix='js' # Tools will be prefixed with 'js_'

)

agent = Agent('openai:gpt-4o', mcp_servers=[python_server, js_server])

When the model interacts with these servers, it will see the prefixed tool names, but the prefixes will be automatically handled when making tool calls.